
Stability testing tools for model tasks

During the Stable Release project, tasks were added to the CAPRI system to help test the coherence and stability of the entire system. They are accessible from the GUI in the work step “Tests”. They help developers run the model with different settings or different starting values and systematically compare the results.

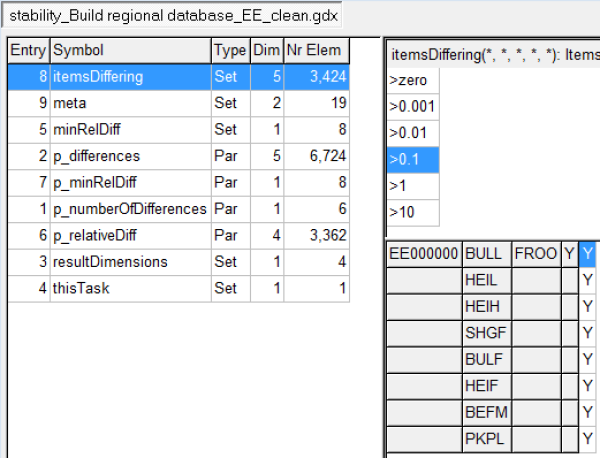

The central task, “Compare task results”, is a somewhat refined version of the GDXDIFF facility built into GAMS. It loads the results from two executions of the same task, e.g. two simulations with slightly different settings, and compares all entries of the main result parameter. Each difference is expressed relative to the absolute value in the first file and then sorted into the classes of a cumulative distribution, counting the number of differences in each class. The results are stored in the sub directory “test” of the standard result directory, under the name pattern “stability_”<task name><additional result type identifier>.gdx. It is advisable to use the additional result type identifier (on the debug options tab) to hint at the nature of the experiment (e.g. “_restart”).
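The classification step described above can be sketched in a few lines of Python. This is only an illustration, not the CAPRI or GDXDIFF implementation: the function names are hypothetical, plain dictionaries stand in for the contents of the two result files, and the class bounds are taken from Table 34. Two points are assumptions: a nonzero difference against a zero value in the first file is treated as infinite, and such an infinite difference is also counted in every finite class because the distribution is cumulative.

```python
import math

# Lower bounds of the cumulative classes, as listed in Table 34.
CLASS_BOUNDS = [0.0, 0.001, 0.01, 0.1, 1.0, 10.0, 100.0]
CLASS_LABELS = [">zero", ">0.001", ">0.01", ">0.1", ">1", ">10", ">100", "infinite"]


def relative_difference(first, second):
    """Difference relative to the absolute value in the first file."""
    if first == second:
        return 0.0
    if first == 0:
        # Assumption: a nonzero difference against a zero base is infinite.
        return math.inf
    return abs(second - first) / abs(first)


def classify(first_results, second_results):
    """Count, per class, the items whose relative difference exceeds the bound.

    Returns the counts and a mapping from each class to the differing items,
    loosely mirroring the set mapping produced by the comparison task.
    """
    counts = {label: 0 for label in CLASS_LABELS}
    members = {label: [] for label in CLASS_LABELS}
    for key in sorted(set(first_results) | set(second_results)):
        # Assumption: an item missing from one file is compared against zero.
        diff = relative_difference(first_results.get(key, 0.0),
                                   second_results.get(key, 0.0))
        # zip stops after the seven finite bounds; "infinite" is handled below.
        for bound, label in zip(CLASS_BOUNDS, CLASS_LABELS):
            if diff > bound:
                counts[label] += 1
                members[label].append(key)
        if math.isinf(diff):
            counts["infinite"] += 1
            members["infinite"].append(key)
    return counts, members
```

Because the classes are cumulative, an item with a relative difference of 0.5 is counted in “>zero”, “>0.001”, “>0.01” and “>0.1”, which is why the counts in such a table can only decrease from one finite class to the next.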

For instance, running the task “Build regional database” twice, first without starting values and then with the results of the first run as starting values, resulted in the differences shown in Table 34.

Table 34: Starting value sensitivity for Estonia in the task Build regional database

| Class | Number of deviations |
| --- | --- |
| >zero | 3362 |
| >0.001 | 34 |
| >0.01 | 16 |
| >0.1 | 8 |
| >1 | 3 |
| >10 | 1 |
| >100 | 0 |
| infinite | 0 |

The task also creates a GAMS set mapping that links the differing items to each of the classes. A developer who wants to investigate particular differences further needs to open the full result file stored in the sub directory “test” of the main result directory. Doing that for this particular run reveals that all differences of 10% or more are due to the distribution of FROO, Fodder Root Crops, to various animals (see Figure 47).

If a more comprehensive suite of tests is carried out, a large number of result files will be produced. The task “Merge comparison results” collects the cumulative distribution of differences from many comparison files and builds a summary. To do so, the merging task needs to know which result files to merge. To add the results of a comparison to that list, check the box “Add comparison output to cumulative list of comparisons” and enter a sensible name for the list of comparisons (both on the general settings tab in the GUI).
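As a rough illustration of what such a summary might look like, the sketch below merges the class counts of several comparisons into one table with a row per comparison. It is not the actual merging task: the function name and the comparison name are hypothetical, and dictionaries stand in for the comparison files. The counts in the usage example are the ones reported in Table 34.

```python
CLASS_LABELS = [">zero", ">0.001", ">0.01", ">0.1", ">1", ">10", ">100", "infinite"]


def merge_comparisons(comparisons):
    """Build a summary table: a header row, then one row per comparison.

    `comparisons` maps a comparison name to its class counts.
    Classes missing from a comparison are reported as zero.
    """
    rows = [["comparison"] + CLASS_LABELS]
    for name in sorted(comparisons):
        counts = comparisons[name]
        rows.append([name] + [counts.get(label, 0) for label in CLASS_LABELS])
    return rows


# Usage with the counts from Table 34; the comparison name is made up.
summary = merge_comparisons({
    "build_regional_database_restart": {
        ">zero": 3362, ">0.001": 34, ">0.01": 16, ">0.1": 8,
        ">1": 3, ">10": 1, ">100": 0, "infinite": 0,
    },
})
for row in summary:
    print(row)
```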

Figure 47: Items differing for Estonia when restarting Build regional database

If many experiments are executed using the batch execution facility, it is useful to keep result files apart by using different directories for results, restart points and result input. Two tasks in the “Tests” work step are intended to help with this:

- Create experiment folder structure: creates an empty tree of result folders, with one sub directory for each “experiment”.

- Collect experiment results: copies selected results from the experiment directory tree and stores them in the standard CAPRI directory with the file suffix “_”<experiment>, as if the user had run the task with the property “Additional result type identifier” set to “_”<experiment>, but with additional control over starting values and data input.
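The effect of these two tasks can be approximated with a short Python sketch. It is an illustration under stated assumptions, not the CAPRI implementation: the function names, the sub directory names in `RESULT_KINDS` and the choice to collect all `*.gdx` files are hypothetical. Only the “_”<experiment> suffix follows the description above.

```python
from pathlib import Path
import shutil

# Hypothetical sub directory names for results, restart points and result input.
RESULT_KINDS = ["results", "restart", "result_input"]


def create_experiment_folders(root, experiments):
    """Create an empty tree with one sub directory per experiment."""
    root = Path(root)
    for experiment in experiments:
        for kind in RESULT_KINDS:
            (root / experiment / kind).mkdir(parents=True, exist_ok=True)


def collect_experiment_results(root, experiments, target, pattern="*.gdx"):
    """Copy result files into `target`, appending "_<experiment>" to each name."""
    root, target = Path(root), Path(target)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for experiment in experiments:
        for source in sorted((root / experiment / "results").glob(pattern)):
            destination = target / f"{source.stem}_{experiment}{source.suffix}"
            shutil.copy2(source, destination)
            copied.append(destination)
    return copied
```

With this layout, a file `res.gdx` in the results folder of an experiment named `exp1` would be collected as `res_exp1.gdx`, the name it would have received from a run with the additional result type identifier set to “_exp1”.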