System stability test

This page describes how to use the tasks found under the work step “Tests”, which is available in the CAPRI GUI for developers.

To be completed within “Stable Release 2”, due March 2018.

Motivation

The only way of knowing that the system actually does what it is intended to do is to test it. Martin (2008) devotes a chapter to testing and distinguishes various types of tests. Tests can be carried out at various levels of the system: low-level tests of individual lines of code or code snippets (unit tests), tests of larger code components that are supposed to perform well-defined manipulations of given data objects (component tests), and tests of the behaviour of the entire integrated system (integration or system tests). Finally, there are manual tests by real humans (exploratory tests). Some software development environments provide infrastructure for automated testing, including measurement of the fraction of code (not) covered by unit tests, real-time highlighting of (non-)tested lines of code, etc. Many test concepts, such as “component testing”, seem more suited to object-oriented programming (OOP) languages.

CAPRI is written in GAMS, which is not an OOP language and does not provide this kind of technical aid. As a consequence, testing of the code is left to the individual developers; it is cumbersome and therefore often neglected. Nevertheless, the underlying ideas are independent of the technical implementation and can, with appropriate effort, also be applied in GAMS.

CAPRI versions from STAR 1.0 onwards contain code and GUI controls that help the developer perform certain technical stability tests. Firstly, they allow the modeller to produce and systematically compare any pair of model results obtained under variations of parameters. This is particularly interesting for assessing the influence of “irrelevant parameters” such as hardware, software and starting points, which should not change the results. Secondly, they provide coding infrastructure for the automated execution and comparison of large suites of such binary comparisons.

Pair-wise comparisons

The developer may run pair-wise comparisons manually from the GUI. We illustrate the procedure with an applied example. Assume that we want to test whether the task “Build regional time series” for Ireland with base year 2012 gives the same results when executed twice in a row without changing anything between the executions. We would perform the following steps:

- Generate two result files with different names, stored in the same folder of the general model results directory. For example:

  - Go to the task “Build regional time series” and select Ireland.

  - Go to the tab “Debug options” and ensure that the field “Additional result type identifier” is empty (the default).

  - Execute the task.

  - Go to the tab “Debug options” and edit the field “Additional result type identifier”. The string you enter there is appended to the names of the output files from this task, so it should be short and contain no spaces, for instance “_restart”.

  - Execute the task again.

  - Look in the result folder (e.g. results\capreg) to verify that you have the two files “res_time_series_12IR.gdx” and “res_time_series_12IR_restart.gdx”.

- Go to the work step “Tests” and select the task “Compare task results”.

- Select the correct task “capreg_time_series” from the first drop-down box. This is only a label that helps the developer sort a large number of comparisons; it is used in the result file name as well as in GAMS sets. The user can choose any name for the comparison by selecting “other” and entering a custom name in the box that appears. The custom name should be short and contain no spaces or special characters.

- Select the name of the parameter that the program should load from the result files. If that parameter does not exist, a confusing error results, as there is presently no built-in check for its existence. In our example, select the parameter DATA1. If you are unsure about the parameter name, open the .gdx files and inspect their contents, e.g. in the GAMSIDE.

- Select the two files to compare in the file selection drop-downs.

- Execute the task.
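Since the comparison task does not check its inputs, it can help to confirm beforehand that both files exist under the expected names. The Python sketch below assumes that the identifier is simply appended to the file stem, as in the example above; the helper names `result_file` and `files_to_compare` are hypothetical and not part of CAPRI.

```python
from pathlib import Path


def result_file(folder: Path, stem: str, identifier: str = "") -> Path:
    """Task output file with the optional result type identifier appended
    to the file stem (assumed naming rule, e.g. "_restart")."""
    return folder / f"{stem}{identifier}.gdx"


def files_to_compare(folder: Path, stem: str, identifier: str) -> tuple[Path, Path]:
    """Return (reference, variant) paths; fail early if either file is missing."""
    reference = result_file(folder, stem)
    variant = result_file(folder, stem, identifier)
    missing = [p.name for p in (reference, variant) if not p.is_file()]
    if missing:
        raise FileNotFoundError("missing result file(s): " + ", ".join(missing))
    return reference, variant
```

For the Ireland example, `files_to_compare(Path("results/capreg"), "res_time_series_12IR", "_restart")` would return the paths of “res_time_series_12IR.gdx” and “res_time_series_12IR_restart.gdx”, or raise an error naming the file that is missing.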

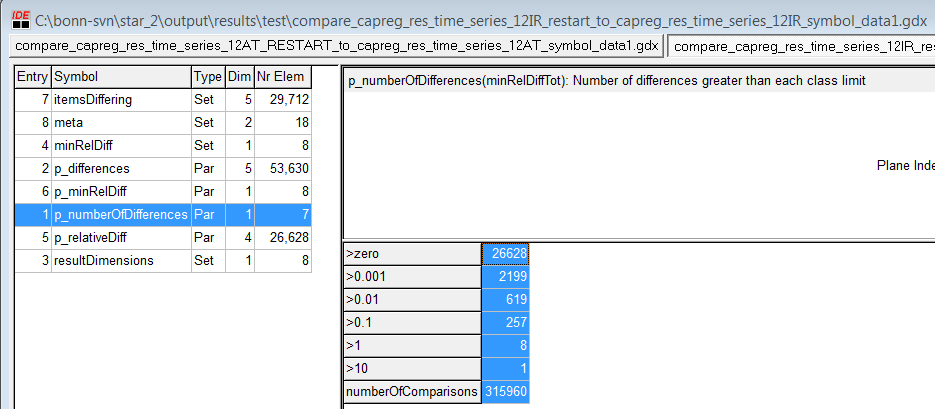

- Locate the result file in the output folder “results\test”. It has a long name composed of the names of the compared files and the compared symbol, e.g. “compare_capreg_res_time_series_12IR_RESTART_to_capreg_res_time_series_12IR_symbol_data1.gdx”. Open it in any GDX viewer of your choice; the GUI has no built-in views for this file.

- First, inspect the symbol “p_numberOfDifference”. It shows the number of differences found, classified by the size of the relative difference (diff2/diff1 - 1), and with “infinity” where one of the two entries is missing entirely.

- Dig into the differences by viewing the set “itemsDiffering”, which lists the particular items that differ in each difference class.

- Finally, inspect the actual data in the symbol “p_differences”, which is structured in the same way as the output of the “GDXDIFF” utility available from the GAMSIDE.
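To make the three symbols easier to interpret, the Python sketch below mimics such a comparison on two parameters held as dicts keyed by index tuples. It is not the CAPRI GAMS implementation: the class bounds (1e-6, 1e-3, 1e-1) are hypothetical, a zero value in the first file is assumed to yield “infinity”, and the labels dif1/dif2 (both values present but different) and ins1/ins2 (entry only in the first or only in the second file) imitate the GDXDIFF convention.

```python
import math
from collections import defaultdict

# Hypothetical class bounds for the absolute relative difference.
CLASSES = [(1e-6, "<=1e-6"), (1e-3, "<=1e-3"), (1e-1, "<=1e-1")]


def relative_difference(v1, v2):
    """diff2/diff1 - 1; infinity if one entry is missing (or the first is zero)."""
    if v1 is None or v2 is None or v1 == 0:
        return math.inf
    return v2 / v1 - 1


def difference_class(rel):
    """Map a relative difference to a difference-class label."""
    if math.isinf(rel):
        return "infinity"
    for bound, label in CLASSES:
        if abs(rel) <= bound:
            return label
    return ">1e-1"


def compare(data1, data2):
    """Compare two parameters stored as {index tuple: value} dicts."""
    counts = defaultdict(int)   # like p_numberOfDifference
    items = defaultdict(list)   # like itemsDiffering
    differences = {}            # like p_differences (GDXDIFF-style labels)
    for key in sorted(set(data1) | set(data2)):
        v1, v2 = data1.get(key), data2.get(key)
        if v1 == v2:
            continue  # identical entries are not reported
        cls = difference_class(relative_difference(v1, v2))
        counts[cls] += 1
        items[cls].append(key)
        if v2 is None:
            differences[key + ("ins1",)] = v1  # only in the first file
        elif v1 is None:
            differences[key + ("ins2",)] = v2  # only in the second file
        else:
            differences[key + ("dif1",)] = v1
            differences[key + ("dif2",)] = v2
    return dict(counts), dict(items), differences
```

For instance, an entry of 50 in the first file and 60 in the second gives a relative difference of about 0.2 and lands in the class “>1e-1”.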

Figure: The results from a stability test such as the one described above, done with a pre-release version of STAR 2.

This procedure can be repeated for any task, GDX file and GAMS parameter. For instance, it can be used to test the influence of different GAMS versions, of emptying the TEMP directory, of switching parallel processing on or off, or of any other experimental change.

For experiments with different start values or for running chains of experiments (e.g. coco-capreg-captrd), the tab “Debug options” contains fields for overriding the GAMS labels that we use in the code to steer where to pick up inputs and write outputs, namely “results_in”, “results_out”, “restart_in”, and “restart_out”. This is further discussed in the subsequent section.
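A chain such as coco-capreg-captrd can be thought of as a sequence in which every task reads what the previous one wrote. The Python sketch below illustrates that idea for the four labels. It assumes, hypothetically, that an empty label means the default location and that one experiment label can be shared by all tasks in the chain; the class `DebugOverrides` and the function `chain_overrides` are illustrative names only, and the values would still have to be entered in the “Debug options” tab.

```python
from dataclasses import dataclass


@dataclass
class DebugOverrides:
    """Values for the four label fields in the "Debug options" tab."""
    task: str
    results_in: str
    results_out: str
    restart_in: str
    restart_out: str


def chain_overrides(tasks, experiment, baseline=""):
    """Let the first task read from `baseline` (assumed: "" = default) and
    every later task read the outputs written under the `experiment` label."""
    overrides = []
    for i, task in enumerate(tasks):
        source = baseline if i == 0 else experiment
        overrides.append(
            DebugOverrides(task, results_in=source, results_out=experiment,
                           restart_in=source, restart_out=experiment)
        )
    return overrides
```

For example, `chain_overrides(["coco", "capreg", "captrd"], "exp1")` lets coco start from the default inputs, while capreg and captrd pick up the “exp1” outputs of the preceding step.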

Multiple comparison suites

The GUI can be used to produce semi-automated tests based on batch-execution of many pair-wise tests. For this, it is important to keep track of naming and storage places for result files, which is where the test tools of CAPRI come in handy.

The following steps are needed in order to complete the tests in STAR 2:

- Create a directory structure to hold all the simulation results. Use the task “Create experiment folder structure”, and provide a path to the place where the directory tree should be created. The directory itself should not yet exist.

- Run the experiments. This is facilitated by the batch file “system_stability_experiments.txt”. By editing this file, individual tasks can be switched on or off. Please note that all experiments except “clean” use the results of “clean” as start values; therefore “clean” must be completed first for the routine to work.

- Collect all the result files in one folder in your CAPRI output directory. This is facilitated by the task “Collect experiment results”.

- Run all the binary comparisons using the batch file “Test_stability_compare_task_results.txt”. This produces a large number of GDX files with comparisons and adds each comparison to a list of all comparisons (all_comparisons.txt).

- Create a summary of all the binary comparisons by executing the GUI task “Merge comparison results”. Note that this relies on the list all_comparisons.txt, which is used as a set in GAMS to loop over all the GDX files created.

- You should now have a summary of all tests in “output/tests/all_comparisons.gdx”.
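The bookkeeping behind the last three steps can be sketched in Python as follows. It assumes, for illustration only, that every experiment is compared with “clean”, that comparison names follow a made-up pattern `<task>_<experiment>_vs_clean`, and that all_comparisons.txt holds one name per line; the actual batch files may use other names and formats.

```python
from pathlib import Path


def comparison_names(experiments, tasks, reference="clean"):
    """One comparison per task and non-reference experiment (hypothetical naming)."""
    return [
        f"{task}_{experiment}_vs_{reference}"
        for task in tasks
        for experiment in experiments
        if experiment != reference
    ]


def write_comparison_list(names, path: Path):
    """Write one comparison name per line, like all_comparisons.txt."""
    path.write_text("\n".join(names) + "\n")


def merge_summaries(list_file: Path, load_counts):
    """Loop over the listed comparisons and collect their counts per
    difference class into one table keyed by (comparison, class)."""
    summary = {}
    for name in list_file.read_text().split():
        for diff_class, n in load_counts(name).items():
            summary[(name, diff_class)] = n
    return summary
```

In CAPRI, `load_counts` would correspond to reading “p_numberOfDifference” from each comparison GDX file; in the sketch it is any callable that returns a dict of counts per difference class.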

References

Beck, Kent (2003) Test-Driven Development: By Example. Addison-Wesley.

Martin, Robert C. (2008) Clean Code: A Handbook of Agile Software Craftsmanship. Prentice Hall.